Part I: From scenic to semantic

Designing in the age of intelligence

By Doug Cook, 1 Aug 2025

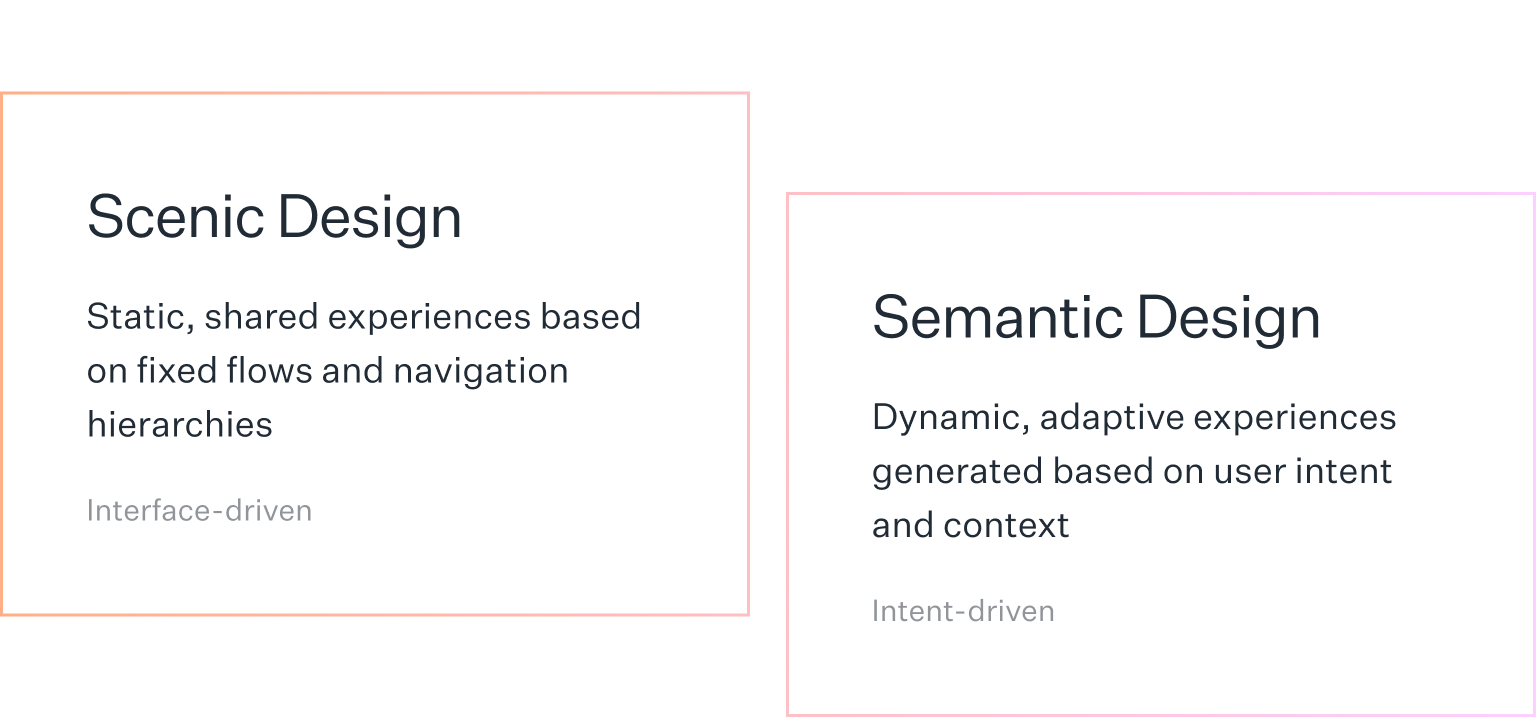

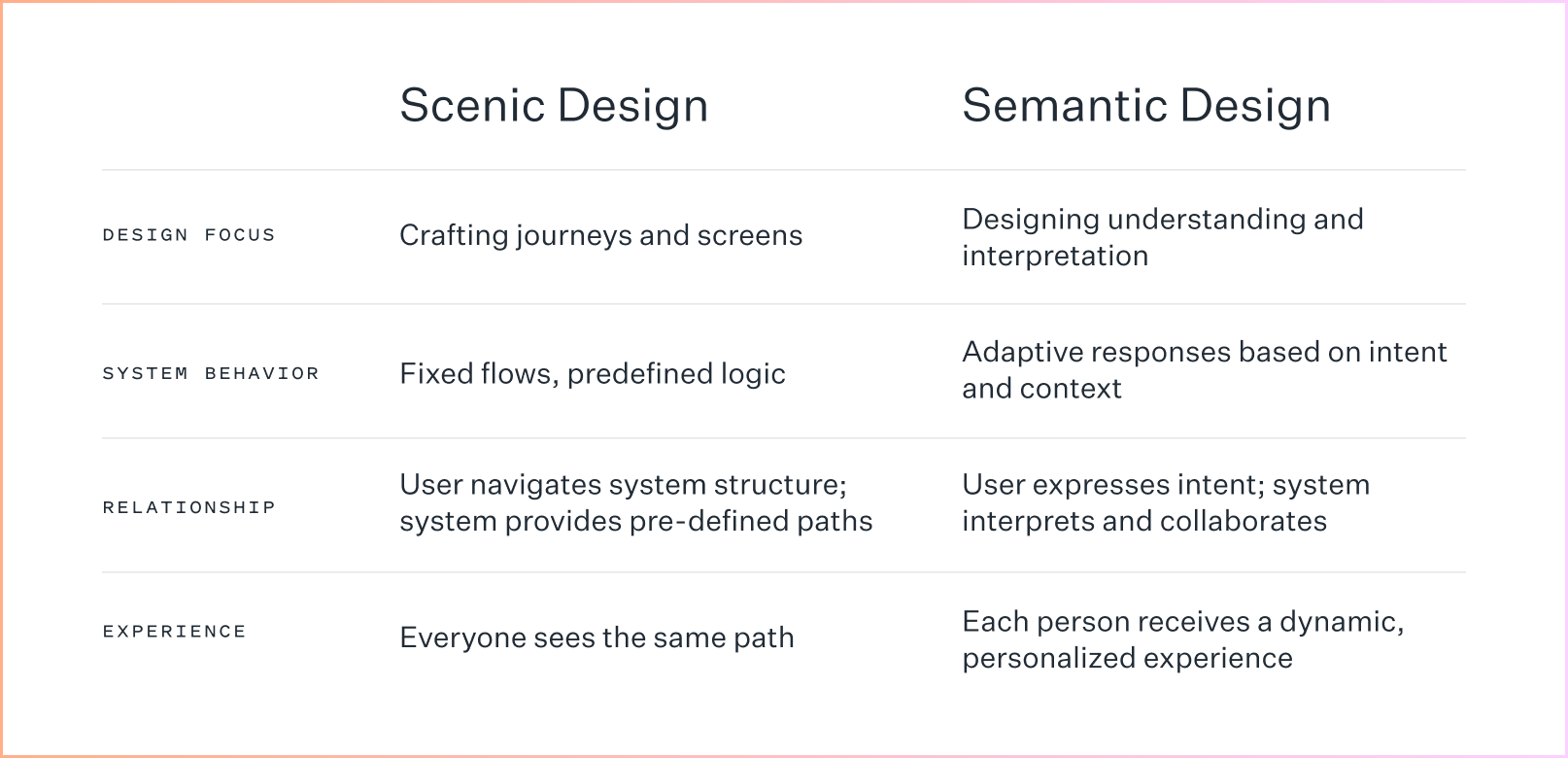

For decades, digital design was scenic, focused on a visual landscape of interfaces. We crafted beautiful buttons, perfected navigation hierarchies, and guided users through carefully choreographed journeys from screen to screen. The interface was the destination: load the right view, click the right control, follow a designed path.

But intent is semantic. It’s driven by meaning, purpose, and the unspoken why behind each action. As AI reshapes our relationship with technology, we’re shifting from scenic design (set paths and structured journeys) to semantic design (what systems understand).

The interface as destination

In the scenic paradigm, every digital experience followed a familiar script. Users navigated URLs, scanned menus, and translated their goals into the language of the interface. Click “Travel,” then “Flights,” then fill out departure, arrival, dates, and passengers. The burden was always on the user to map their intent onto the system’s structure.

This worked when interfaces were simple and tasks were predictable. But as workflows grew more complex, the scenic approach began to break down. Interfaces demanded users become experts in the system rather than their own goals.

Consider Sarah, a product manager preparing for her team’s next release. She’s balancing deadlines with a lean team, trying to stay ahead of stakeholder feedback while keeping engineers unblocked.

In the scenic world, she opens her project management tool to create epics and user stories, checks her analytics for usage data, reviews mockups in her design tool, schedules stakeholder reviews in her calendar, pings engineers on Slack, and updates timelines on her roadmap. Each tool requires her to translate her goals into a different brand of interface logic.

From commands to context

We’re in a transitional moment where interfaces still offer explicit choices and manual controls, but beneath the surface, semantic understanding is beginning to emerge. Search engines understand natural language. Email clients draft replies. Design tools suggest layouts based on content.

Instead of asking, “What’s the optimal flow to help users accomplish this?” we’re learning to ask, “How can systems understand and adapt to what users are trying to accomplish?” But this transition is messy. We’re building systems that understand context, but still forcing users to interact through scenic metaphors.

Understanding over interface

Semantic design flips the burden: instead of users mapping their intent onto the system’s structure, the system works to understand the intent itself. In a semantic system, Sarah simply describes what she wants to accomplish: “We’re releasing the new collaboration feature next month. I need to coordinate final testing with engineering, get design sign-off, update stakeholders on timeline changes, and prep our rollout plan.”

The system understands her goals and orchestrates a response across project management, design reviews, communication planning, and delivery. She’s no longer jumping between tools; she’s working with a system that understands what needs to happen and helps her get there.

These systems restore our agency, evolving with our goals rather than guiding us through predefined flows. But this shift isn’t without complexity.

The challenge ahead

As semantic systems grow more capable, designers face a new set of challenges:

Agency

When systems infer intent, users risk becoming dependent on system reasoning rather than maintaining their own understanding. How do we preserve human agency while reducing cognitive load?

Transparency

Unlike traditional interfaces with visible controls, semantic systems make decisions behind the scenes. How do we design clear explanations of system reasoning and decision paths?

Control

When systems interpret rather than execute, they can get it wrong. How do we preserve the ability to guide, override, or redirect, especially when the stakes are high?

Context

These systems rely on rich context to interpret intent. How do we gather and apply that context thoughtfully, acting on environmental signals, personal history, and situational cues without sacrificing privacy or reinforcing bias?

Experience

Semantic efficiency risks erasing the moments that build brand and meaning. How do we preserve emotional engagement and experiential choice when systems optimize for speed and outcomes?

Ethics

As systems shape decisions and learn from behavior, they carry moral weight. How do we maintain ethical responsibility and ensure systems align with human values, not just optimize for metrics?

Meeting these challenges will require new ways of thinking about user experience.

Designing for semantic interaction

The interface is no longer a frame; the system is the experience. With systems becoming more adaptive and contextual, we’re shifting from designing screens to designing intelligence. That means shaping the conditions for understanding: how systems engage with ambiguity, context, and the nuance of human goals.

For designers, this marks a shift in responsibility. We need to rethink both how we work and what we value:

Think in systems, not screens

Semantic design unfolds across touchpoints, platforms, and time. A single intent might surface in multiple contexts, across different devices. It’s less about where a button goes, and more about when, how, and why intelligence appears.

Focus on relationships

Think of design as relational, not just visual. Focus on how voice, gesture, spatial, and visual cues support collaboration. Use dialogue to explore how these relationships develop and adapt over time.

Design for ambiguity

Unlike interfaces with clear affordances, semantic systems must handle uncertainty, multiple interpretations, and evolving intent. Design guardrails that support user agency, set boundaries for system behavior, and clarify what’s negotiable versus what’s fixed.

Test for understanding

Traditional usability testing asks, “Can users complete the task?” Semantic testing asks, “Does the system understand what users actually want to accomplish?” Test for alignment, not just transactional success.

Define agency and presence

When is the system a guide, and when is it a partner? When should it take initiative, and when should it defer? How does it modulate between machine-led and human-led flows? Design for what’s desirable, not just what’s possible.

Beyond automation to alignment

Interfaces won’t disappear. They’ll become more ephemeral, adaptive, and aligned with intent. As systems grow more interpretive, the human side of design becomes more critical, not less.

Semantic design requires deep empathy for how people think, work, and make decisions. This means designing systems that explain their reasoning, invite correction, and maintain transparency, even as they handle complexity. The goal is to create systems that think with people, not for people.

This is a fundamental shift in how we approach digital design: from crafting beautiful, usable interfaces to building intelligent partnerships. For designers, it promises interfaces that don’t just look intuitive—they actually are.

Continue reading the full series on Human Computing

Part 2: Human Computing (The Manifesto)

Part 3: Beyond the interface (The Framework)

Doug Cook

Doug is the founder of thirteen23. When he’s not providing strategic creative leadership on our engagements, he can be found practicing the time-honored art of getting out of the way.